Finally, Useful Science!

MIT researchers finally found a way to split spaghetti into only two pieces

There's a twist, but pasta will never be the same again.

Quaternion from 2 vectors shows you how to use the formulas we all had to learn at some point to make computations easier on the processor.

"I don't like maths, my brain overheats"

(Almost every student)

If they put pixels on a screen, programs need geometry and trigonometry. Deal with it.

My field is full of buzzwords. After I attempted a somewhat comprehensible explanation of what ML is and why it works for certain types of tasks, I had multiple conversations with people asking for simplistic renderings of other buzzwords. The ML piece was a decent amount of work, so forgive me, but I'll go shorter this time.

Ah the cloud. Everything should be in the clouds, except my head when it's tax time.

You probably have a computer on a desk somewhere. If that computer is running something 24/7 like keeping your movie or music libraries safe, and that content is accessible outside of the computer (on your tablet, tv box, whatever), then that computer is a server. Because it serves content to outside machines.

If that server isn't physically on your desk, but in a room with a better internet connection, like, say, a data center, it's a remote server. And if you don't even know for a fact that it is a physical machine in a physical location that you can go and poke, it's the cloud.

"The cloud" is flowery language for "a server that has all the functionality of a PC, but probably isn't a physical machine".

OK, first of all, VR isn't new. We've had (bad, admittedly) VR headsets for at least 30 years if not more. It's just that the quality is now bearable due to technological advances.

So, what's the deal with all those AR/VR things?

VR is virtual reality, meaning it should hide the real world and put you in a virtual one. VR headsets encompass your whole vision, usually have sound as well, and completely cut you off. The advantage is that it removes the distractions, making it (a lot) more immersive. You are in that cockpit. Yes, the movie you are watching is projected on a screen that's 4 times the size of your tv (to your senses at least).

The downside, of course, is that it hides the real world. You can potentially bang knees or elbows on stuff you can't see, and you can't really interact with your significant other when watching the movie.

That's where AR comes in. It's about augmented reality. It just puts virtual stuff on top of real stuff. If you've ever seen a heads-up display, it's that kind of stuff on steroids. You can add text bubbles, images, or 3d objects that will stay in place relative to the real world, but that you can only see through AR devices.

You know all those spy gadgets that put a picture of the perp in the corner with a ton of information on them, but without blocking the view because it might kill the hero? AR.

Back to the fantastic world of servers and websites and... web. Big Data is about what's on the can: big amounts of data.

How big, you ask? Well, usually, we are talking billions of data points, with the probable intent of feeding it to a Machine Learning algorithm.

So what's the issue with that and why is it separate from regular data, or databases?

There are two main issues when you look at data: structure and size. And it would be fine, if there weren't any connections between the two. But, when you deal with Big-Data-Ish kind of problems, it looks like this:

Basically, you have 3 data sources (visits on the website, visits on the blog, and tweets) that don't necessarily contain the same type of data, and a problem where you'd like to derive new information from them (when I tweet, I get a 200% increase in traffic).

As you can imagine, it doesn't fit in a regular spreadsheet program. You have to do some voodoo to extract or modify data to find matches between the various sources, without losing the original data.

So yea, it's big. More like the sea is big, than a person is big.

The blockchain craze. The most fantastic adoption of nerd stuff by non-tech people since the MCU. What is a blockchain and what does it have to do with money?

I'll go super simplistic here, there are people who write primers all day long on the topic. The important thing to understand is that blockchain is a mathematical/computer science concept, and that it is used in various "crypto" currencies (they aren't encrypted at all, btw) to legitimize certain aspects of the currency.

The blockchain is a block chain. Done. Nah, just kidding. It's a list of data chunks linked together in a very special way.

Let's imagine a simple scenario

I have an afternoon in front of me and my 3 nephews/nieces want to secure some time on that calendar.

So the big one comes and asks me for 2h. I say yea, sure, no problem. Then the middle one comes and also asks for 2h. That still works. So now I have 4h promised to the kids. Then the little one comes and asks for 2h as well. It doesn't fit, right? So we have to change the slots a bit. How do we do that in a way that won't get someone screaming they've been ripped off?

Let's look at the transactions:

The blockchain is kind of like that, a series of transactions, each one "signed" and "verified" through cryptostuff rather than honor.
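To make the "chunks linked together" idea a bit more concrete, here is a minimal Python sketch of a hash-linked list. The function names (`block_hash`, `append_block`, `is_valid`) and the calendar entries are made up for illustration. It only shows the linking part: real blockchains also involve digital signatures and a way for participants to agree on the chain, none of which is modelled here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the very first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps([index, data, prev_hash]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, data):
    """Add a block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain):
    """Recompute every hash and check that every link still matches."""
    prev_hash = GENESIS
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical entries, loosely based on the afternoon scenario.
chain = []
for entry in ["big one: 2h", "middle one: 2h", "little one: asks for 2h"]:
    append_block(chain, entry)

print(is_valid(chain))            # True
chain[0]["data"] = "big one: 4h"  # someone quietly rewrites history
print(is_valid(chain))            # False
```

Because each block's hash depends on the one before it, changing an old entry breaks the check unless every later block is recomputed too.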

It is already in use, and quite efficiently as you can imagine, for contracts. Everyone agrees on things, you can add things to it later, and no takebacks that aren't validated in the chain. It's a "secure" and "safe" record of the actions that went on that list.

What does it have to do with money? Nothing, but it can have something to do with value.

Let's say I start a new company that I'm sure will make plenty of money. The thing is, I don't have the seed money. What I could do, is sell you a promise to pay you back when I do have the money. And you get that promise for XXX spacebucks, and you want to give half of it to your spouse. So we use a blockchain to make sure we keep track of all the modifications that were made and who owns a bit of my promise. Seems familiar?

What does it have to do with mining?

OK, so, I don't think I'll surprise anyone by saying that something is roughly as "secure" as the time it takes to crack it open. The lock on the shed has a "security" of 0.1s, the lock on my car maybe 5s, the lock on my door, let's say 5 minutes.

A lock in cryptostuff is a super hard mathematical function that takes a while to run, but that's easy to verify. If I say A=1, B=2, etc... and I tell you that my password has the sum 123456789, it's easy to verify, but it's going to be hard(ish) to find my password from the number because there's a lot of combinations of letters that have that sum.
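Here is that A=1, B=2 toy "lock" as a small Python sketch. The function names are mine, and this letter sum is only the toy from the paragraph above, nowhere near a real cryptographic function. It shows that checking a password is a single pass, while going backwards from the sum means searching through candidates.

```python
from itertools import product
import string


def letter_sum(word):
    """Sum letter positions: a=1, b=2, ..., z=26 (other characters are ignored)."""
    return sum(ord(ch) - ord("a") + 1 for ch in word.lower() if "a" <= ch <= "z")


def candidates(target, length):
    """Brute-force every lowercase word of a given length with a given sum."""
    return [
        "".join(letters)
        for letters in product(string.ascii_lowercase, repeat=length)
        if letter_sum("".join(letters)) == target
    ]


print(letter_sum("cab"))          # 6: verifying a guess is one cheap pass
matches = candidates(6, 3)        # going backwards means trying 26**3 words
print(len(matches))               # 10 different 3-letter words share that sum
print("cab" in matches)           # True
```

Even in this tiny case, the sum alone cannot tell you which of the ten words was the password.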

The problem is, nowadays, computers can compute trillions of operations per second. So finding that password is going to be fairly easy. So we have to use a more complicated function. Then computers catch up and we use a more complicated function again.

So "mining" is actually performing those super hard functions to ensure that the signatures are valid. It takes time and effort (on the computer's part), so the "miner" is rewarded with a percentage of the promise as well, for services rendered. And it's added to the ledger.
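A rough way to see "hard to run, easy to verify" in code is a hash puzzle in the style of hash-based proof of work. This is my own illustrative sketch, not the scheme of any particular currency: the `mine` and `verify` names, the message, and the `difficulty` parameter (number of leading zero hex digits) are all assumptions.

```python
import hashlib


def digest(data, nonce):
    """SHA-256 of the data combined with a candidate nonce."""
    return hashlib.sha256(f"{data}:{nonce}".encode("utf-8")).hexdigest()


def mine(data, difficulty):
    """Try nonces one by one until the hash starts with enough zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while not digest(data, nonce).startswith(prefix):
        nonce += 1
    return nonce


def verify(data, nonce, difficulty):
    """Checking a claimed nonce costs a single hash."""
    return digest(data, nonce).startswith("0" * difficulty)


message = "uncle promises 2h to the big one"  # hypothetical ledger entry
nonce = mine(message, difficulty=3)           # thousands of hashes on average
print(verify(message, nonce, difficulty=3))   # True, after one hash
```

Each extra zero in `difficulty` multiplies the expected search work by 16 while verification stays at one hash, which is how the function can be made "more complicated" as computers catch up.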

When you chain all these things together, whether you participated in "mining", or offered something in exchange for a bit of that promise, or you were given a bit of that promise, you have the "guarantee" that some day, down the line, you'll get 0.0X% of that promise, and that has some value.

That's why cryptocurrencies are speculative in nature. Currently, the only tangible value anyone's put on the chain is the energy and time needed to "mine" the signatures. How valuable is that? That's up to every participant to decide.

It's very hard to escape a few buzzwords in our field, and at the moment ML is one of those words, in conjunction with things that smell kind of where ML would like to go (looking at you "AI").

This week's WWDC was chock-full of ML tools and sessions, and Apple's Core ML technology is really impressive. But ML isn't for everything and everyone (looking at you, "blockchain"), and I hope that by explaining the principles under the hood, my fellow developers will get a better understanding of how it works to decide by themselves when and how to use it.

Let's get back to school maths for a sec. I will probably mistype a coupla formulas, but here goes.

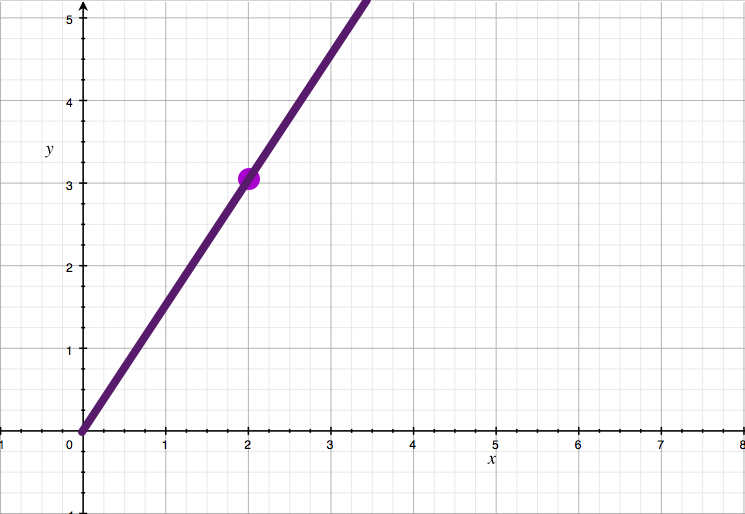

Let's say I have a point in a plane and I want to figure out what the line going through that point P and the origin is.

Easy problem right? It's just y = (P.y/P.x) * x

Similarly, most of us still know that the line going through 2 points P and Q on a plane has the equation: y = (Q.y-P.y)/(Q.x-P.x) * x + c where c is a monstrosity that's easy to calculate but has a terrible form (it's ((Q.x-P.x)*P.y + (P.y-Q.y)*P.x) / (Q.x-P.x)).

We tend to remember the slope part but not the constant part because 1/ it's usually more useful to know, and 2/ once you figure this out, you have y = a*x + c, and you just have to plug in the coordinates of one of the two points to calculate it, so it's "easy". Just sayin.

Anyways, if I give you two points and I tell you that this is how things go, you can use that line to extrapolate new information.

Let's say I tell you that the grade of your exam is a function of your height. If you know that this 1.70m person had 17 and that this 1.90m person had 19, you can very easily figure out what your own grade will be. This is called a predictive model. I'll introduce a couple more terms while I'm at it: whatever feeds into the model is a feature (your height), and whatever comes out is a label (your grade).
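As a quick sketch of that height-to-grade model in Python (the function names `line_through` and `predict_grade` are mine, and the 1.80m person is an assumed example):

```python
def line_through(p, q):
    """Return slope a and constant c of the line y = a*x + c through p and q.

    Raises ZeroDivisionError for a vertical line (same x for both points).
    """
    (px, py), (qx, qy) = p, q
    a = (qy - py) / (qx - px)
    c = py - a * px  # plug one of the two points back in to get the constant
    return a, c


# The two known data points: (height in metres, grade).
a, c = line_through((1.70, 17), (1.90, 19))


def predict_grade(height):
    """The 'predictive model': feature (height) in, label (grade) out."""
    return a * height + c


print(round(predict_grade(1.80), 2))  # 18.0
```

The rounding is only there to hide floating-point noise; the model itself is just the line equation.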

Let's get back to the dots and lines.

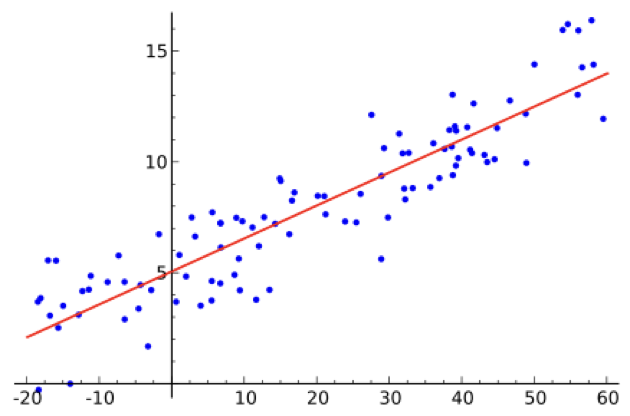

What's the equation of a line that goes through 3 points? 99.999999999% of the time, it will be a triangle, not a line. If you have hundreds or thousands of points, the concept of a line going through all of them becomes completely absurd.

So, ok, let's change tacks. What is the line that fits most of the data? In other words, what's the line that is as close as possible to all of the points?

OK, this is the part where it gets a bit horrendous for us developers, and I will expand on it afterwards.

You can compute the shortest distance between a point and a line using the following:

line: A*x + B*y + C = 0

point: (m,n)

distance: | A*m + B*n + C | / √(A²+B²)

(code-ish): abs(A*m + B*n + C) / sqrt(A*A+B*B)

Yes, our friend Pythagoras is still in the building. I'll leave the explanation of that formula as homework.
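For the copy-pasters among us, a runnable version of the code-ish line above, as a small Python sketch (the function name and the example line are mine):

```python
import math


def distance_to_line(A, B, C, m, n):
    """Shortest distance between point (m, n) and the line A*x + B*y + C = 0."""
    if A == 0 and B == 0:
        raise ValueError("A and B cannot both be zero: that is not a line")
    return abs(A * m + B * n + C) / math.hypot(A, B)


# Line 3x + 4y - 10 = 0 and the origin: |-10| / sqrt(9 + 16) = 10 / 5
print(distance_to_line(3, 4, -10, 0, 0))  # 2.0
```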

So you can say that the total error is the sum of all the distances between your prediction (the line) and the data (the points), or the average distance. Because it's computationally super expensive, in ML we tend to use the distance on only one of the axises... axisis... axs... the coordinates in the system x, or y, depending on the slope. Then we square it, to emphasize the points that are super far from the line while more or less ignoring the ones close to the line.
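A minimal sketch of that squared error in Python, assuming we measure along y and write the line as y = a*x + c. The four points are made up to show the effect of squaring.

```python
def squared_errors(a, c, points):
    """Squared vertical gap between the line y = a*x + c and each point."""
    return [(a * x + c - y) ** 2 for x, y in points]


def mean_squared_error(a, c, points):
    """Average of the squared vertical gaps."""
    errors = squared_errors(a, c, points)
    return sum(errors) / len(errors)


# Made-up data: two points on the line y = x, one off by 1, one off by 3.
points = [(0, 0), (1, 2), (2, 2), (3, 6)]

print(squared_errors(1, 0, points))      # [0, 1, 0, 9]
print(mean_squared_error(1, 0, points))  # 2.5
```

The point that is 3 times farther away weighs 9 times more in the total, which is the "emphasize the far ones" effect.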

Once we have the error between the prediction and the actual data, we tweak A, B and C a bit and we compute the error again. If it makes the error go down, we twiddle in the same direction again, if not, we twiddle in the other direction. When the error doesn't change much anymore, we have found a local minimum to the error, and we have a line that's not bad, all things considered. Remember this, it will be important later.
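The twiddling loop can be sketched in a few lines of Python. This is a deliberately naive version under my own assumptions: the line is written as y = a*x + c instead of A, B and C, the error is the mean squared vertical error, and the step is halved whenever no tweak helps so that the loop stops once the error "doesn't change much anymore". Real ML libraries use smarter update rules (gradient descent and friends).

```python
def mse(a, c, points):
    """Mean squared vertical error of the line y = a*x + c."""
    return sum((a * x + c - y) ** 2 for x, y in points) / len(points)


def twiddle_fit(points, step=1.0, tolerance=1e-9):
    """Nudge a and c up or down, keeping any nudge that lowers the error."""
    params = [0.0, 0.0]  # [a, c], arbitrary starting guess
    best = mse(*params, points)
    while step > tolerance:
        improved = False
        for i in range(len(params)):
            for direction in (+1, -1):
                trial = list(params)
                trial[i] += direction * step
                err = mse(*trial, points)
                if err < best:  # error went down: keep the tweak
                    params, best = trial, err
                    improved = True
                    break
        if not improved:  # nothing helped: take smaller steps
            step /= 2
    return params[0], params[1], best


# Made-up points lying exactly on y = 2x + 1.
points = [(0, 1), (1, 3), (2, 5), (3, 7)]
a, c, error = twiddle_fit(points)
print(a, c, error)  # 2.0 1.0 0.0
```

With noisy data the loop ends on a small but non-zero error, and nothing guarantees that the minimum it lands on is the global one for fancier models.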

There's a lot of maths about local minima vs global minima, how to twiddle the parameters right, how to do the same thing in more dimensions (your height and your age and the length of your hair factor into your grade), and not trying to fit a line, but a parabola (x²) or a more generic polynomial function, etc etc etc, but to discuss the principle of the thing and its limitations, simple linear regression (the thing we just saw) is enough to get the point across. If you use more complicated maths, you'll end up having a more complicated line equation, and a more complicated error calculation, but the steps will remain the same.

Based on that very very short summary of what we are trying to do, two problems should jump out of the page:

If the data doesn't fit neatly on a vaguely recognizable shape, there are two options: there is no correlation or you got the data wrong.

Think back to the grading system from earlier. Of course this isn't a good grading system. And if by some freak accident my students find a correlation between their height and their grade, I will be crucified.

The actual job of a data scientist isn't to look at a computer running ML software that will spit out magic coefficients for lines. It's to trawl for days or weeks in data sets that might eventually fit on a somewhat useful predictive model. The canonical example is the price of a house. Yes it's dependent on the size of the house, but also the neighborhood, and the number of bathrooms, and the sunlight it gets etc... It never fits perfectly with x money per 1 m². But if you tweak the parameters a bit, say by dividing the size by the number of rooms, you might end up with something close enough.

As developers, this is something we tend to shun. Things have value x, not weeeeeell it's kind of roughly x, innit?. But real life isn't as neat as the world inside a computer, so, deal with it.

That's why data scientists get mucho dineros, because their job is to find hidden patterns in random noise. Got to be a bit insane to do that, to begin with. I mean, isn't it the most prevalent example of going crazy in movies? Sorry, I digress.

That's why sane (and good) data scientists get много рублей.

It seems that most of what "Machine Learning" is about is fairly simple maths and the brain power of prophets. Well it is and there's nothing new to these methods. We've been using them in meteorology, economics, epidemiology, genetics, etc, since forever. The main problem lay in the fact that all those calculations took ages. In and of themselves they aren't hard. A reasonably diligent hooman can do it by hand. If we had an infinite amount of monkeys... no, wrong analogy.

So... what changed?

In a word: parallelization. Our infrastructures now can accommodate massively parallel computation, and even our GPUs can hold their own on doing simple maths like that at an incredible speed. GPUs were built to calculate intersections and distances between lines and points. That's what 3D is.

Today, on the integrated GPU of my phone, I can compute the average error of a model on millions of points in a few seconds, something that data scientists even 20 years ago would do by asking very nicely to the IT department for a week of mainframe time.

Everything is numbers. It just depends on what you look at and how.

An image is made of pixels, which are in turn made of color components. So there's some numbers for ya. But for image recognition, it's not really that useful. There are a number of ways we can identify features in an image (a dark straight line of length 28 pixels could be one, or 6 circles in this image), and we just assign numbers to them. They become our features. Then we just let the machine learn that if the input is that and that and that feature, we tend to be looking at a hooman, by twiddling the function a lil bit this way or that way.

Of course, the resulting function isn't something we can look at, because it's not as pretty or legible as y = a*x + b, but it's the exact same principle. We start with a function that transforms the image into a number of mostly relevant features, that feeds that combination of values to a function that spits out it's a hooman or it's not a hooman.

If the result isn't the right one, maybe we remove that feature and start again... the fact that there are 6 circles in that image isn't correlated to the fact that it's a hooman at all. And we reiterate again, and again, and again, and again, until the error between the prediction and the reality is "sufficiently" small.

That's it. That's what ML is about. That's all Machine Learning does today. It tries a model, finds out how far from the truth it is, tweaks the parameters a bit, then tries again. If the error goes down, great, we keep going in that direction. If not, then we go the other way. Rinse, repeat.

You can see why developers who are a bit preoccupied with performance and optimizations have a beef with this approach. If you do the undirected learning (I won't tell you if it's a line, or a parabola, or anything else, just try whatever, like in vision), it's a horrendous cost in terms of computing power. It also explains why, in all the WWDC sessions, they show models being trained offline, and not learning from the users' actions. The cost of recomputing the model every time you add a handful of data points would be crazy.

It also helps to look at the data with your own eyes before you go all gung-ho about ML in your applications. It could very well be that there is no possible way to have a model that can predict anything, because your data doesn't point at any underlying structure. On the other hand, if what you do is like their examples, about well-known data, and above all mostly solved by a previous model, you should be fine to just tweak the output a bit. There's a model that can identify words in a sentence? We can train a new model that builds upon that one to identify special words, at a very low cost.

Just don't hope to have a 2s model computation for a 1000-point dataset on an iPhone SE, that's all.

Something to remember is that marketing people love talking about "AI" in their ML technobabble. What you can clearly see in every session led by an engineer is that they avoid that confusion as best as they can. We don't know what intelligence is, let alone artificial intelligence. ML does produce some outstanding results, but it does so through a very very stupid process. But the way Machine Learning works today can't tackle every problem under the sun. It can only look at data that can be quantified (or numberified for my neology-addicted readers), and even then, that data has to have some sort of underlying structure. A face has a structure, otherwise we wouldn't be able to recognize it. Sentences have structures, most of the time, that's how we can communicate. A game has structure, because it has rules. But a lot of data out there doesn't have any kind of structure that we know of. I won't get all maudlin on you and talk about love and emotions, because, well, I'm a developer, but I'm sure you can find a lot of examples in your life that seem to defy the very notion of causality (if roughly this, then probably that), and that's the kind of problem ML will fail at. It may find a model that kinda works some of the time, but you'd be crazy to believe its predictions.

If you want to dig deeper, one of the best minds who helped give birth to the whole ML thing has recently come out to say that Machine Learning isn't a panacea.

As developers, we should never forget that we need to use the right tools for the job. Hammers are not a good idea when we need to use screws.

I have been following the sessions from the depths of my couch, living with a 9h time difference with everything around me, but it's always worth it: the field we are in evolves and mutates all the time, and it would be ridiculous to think that I'm done with my learning.

I always have mixed feelings regarding the conference itself: I was there when it was in San Jose before it was in San Francisco. I've been there since, multiple times. I see a mutation in my field that doesn't completely jibe with me, but I understand why this is. We are hip now. It comes with benefits (I don't have to lie about my job anymore), and with disadvantages (people will say they belong to my tribe, because it's cool).

All of that to say that I will never make fun of someone who says they don't know how to do X in code. If you have a very long bathroom break, I recommend this explanation. Coding, programming, developing, it's hard. You've got to translate vaguely human demands into a logic that's definitely machinic. When you don't know how to do X, you have to learn it and that is fine.

However, the ratio of learned people to learners has definitely shifted in the past few years. WWDC was a thing that you did if you knew that coding would be your life, and quite a huge investment of time, energy and neurons. I remember sitting for lunch (yes, there was seated lunch with all the attendees, and we'd compare scars and anecdotes, discuss issues with frameworks freely with engineers that weren't really supposed to disclose so much information, because we all knew that we were in the same boat) with the people who fricking wrote the Java Virtual Machine, one engineer from SETI who just released SETI@Home, and feeling very very junior. But they didn't exclude me from the conversation, and before long, the age difference was forgotten, and we were simply discussing (calmly) the advantages and disadvantages of various programming models. Spending a week at WWDC would usually overload my brain something fierce, on top of the physical exertion. I wouldn't be able to talk to non-coders for a while after that, because my brain was so full of things to try, answers to my questions, cool new things to read about, and a fistful of business cards.

From afar, and from the conversations I can have with people who come back from their migration, it seems to me that the networking has taken over and that the technical gets pushed to the labs, where you can ask questions relevant to your problem secretly, kind of, to experts from within the Fruit Company. The sessions are nice, and you can tell the presenters would looooooooooove to go into more details and geek out on specifics. But they can't because the sessions have to be 40 minutes and digestible by an audience that is potentially less invested in the minutiae.

All in all, it seems that it's less about code and more about The Message. You pull a probably very competent engineer from their lair, who is probably very interested in what they present. You put them in front of other engineers (probably) very interested in what they're about to talk about and have a million questions. And you make sure that they can't geek out about details and subtleties.

That's for later. That's for when you bump into the presenter in the hall, or when you go to the labs. So if your question is of any interest to me, or if I have the same one, I will never get the answer.

I know I sound like a bittervet. But I can't conceive my field without the built-in drive to explore and learn and dig deep that I see kind of regressing. Like on Stack Overflow, where there used to be super technical and alien questions, with threads going deep into architectures, I have the feeling that the vast majority of the open topics now are mundane, and relatively simple. It's all about the job. "How do I do X?", not "OK, I can do X that way, and it kind of works, but I'm looking for better alternatives".

That being said, the session which blew me away and was by far the most entertaining in a "you won't find that tutorial on page 1 of the documentation" kind of way was Chris Miles's advanced debugging techniques. It's quirky, it's fun, it mixes languages and techniques and dives deep into how the thing works under the hood. And it emphasizes curiosity and using your brain more than copy-pasting.

Anyways, back to work, lots of new technologies to investigate in my (non-existent) free time!